On-Chain Worlds With Terrain Generation

I gave myself an interesting challenge while designing the NFTs for Curta Golf. Curta is a fully on-chain competitive programming platform with some of the best smart contract engineers and security researchers, and Golf was the 2nd competitive format we added. The goal for players is to submit gas-optimized (i.e. "gas-golfed") solutions to challenges, and the "King of the Hill" NFT gets transferred to them if they submit the best solution (i.e. lowest gas usage).

There's a couple of reasons I decided to make Curta fully on-chain from novelty to my appreciation for fully on-chain projects, but the main one was because it'd maximize meritocratic competition. Anyone can see the challenges at the same time on-chain, and anyone can submit solutions with the same conditions. You can even go improve the current best solution right now. So, with this philosophy and other motivators such as provenance and openness, there was no question whether the corresponding NFT's metadata would be on-chain or off-chain.

Concept

My ideas on art concepts were immediately scoped by 2 things:

- I wanted to create something following the "golfing" theme.

- I wanted to learn a couple new techniques for making fully on-chain art.

When I think of golf, the first things I think of are golf courses and how every course is different with its own set of difficulties—just like how every Curta Golf challenge is different.

I've also been wanting to learn about terrain generation algorithms and on-chain (i.e. optimized) pixel art, so what if I made generative, pixel art "worlds"? That'd feel like each NFT was visually representing a "golf course" (i.e. the challenge) and require me to understand and implement two new techniques.

Sanity checking

I liked the generative worlds idea and concept, so I decided to sanity check it to see how hard it'd be or how long it'd take. Doing things fully on-chain obviously places some restrictions, but I try to never bound my thinking while ideating cause you can work around them 99% of the time. In fact, it often leads to many interesting and fun solutions along the way. Despite that, once I have a concept, I still have to perform some quick sanity checks to decide if I should dive deeper. This can often just be like 3 seconds of thinking or even just "vibe"-based (i.e. does it feel possible/easy), but it's an important chain of thoughts.

The process was mostly vibe-based for this project, probably because I had some feeling that there's no way terrain generation can be that complex for small outputs if games do it at a complexity and speed magnitudes higher. At the very least, terrain generation with constrained compute resources must be a very well-studied and well-documented area, so I should be able to figure something out—especially since we have "unlimited" gas budget because the art renderer is a read function.

Design

I played around with a few designs, but this is what I ended up shipping:

It has the generative world, and it clearly displays the important metrics of the leading solution.

Terrain generation

I began researching terrain generation first because it's the bulk of the logic, and I figured mapping the algorithm's output to the corresponding pixel data would be easy. Pretty quickly, I came across a great video on YouTube called "Minecraft terrain generation in a nutshell" by Henrik Kniberg.

I recommend Henrik's video for a deeper explanation, but the most important ideas are to:

- Use an algorithm like a 2D Perlin noise map for smooth random values.

- Map terrain using a combination of multiple 2D maps' values.

Smooth randomness

The main challenge with terrain generation is to smoothly transition between different biomes. If we naively generate worlds by randomly selecting tiles, the outputs won't be realistic. For example, if an "ocean" tile gets placed right next to a "desert" tile, that wouldn't make much sense. With a Perlin noise map, we can generate a grid of random values that transition smoothly to adjacent values.

Terrain selection

Once we have the random values, we need to map the value at each tile index to the terrain value (i.e. which biome to render). We can already kinda see how a single 2D Perlin map resembles some natural, geographic formation, like a topographic map showing elevation. By mapping values from a single map to a single property, we can build a simple framework to introduce more complex generation where we combine values from multiple maps to get smooth transitions between biomes.

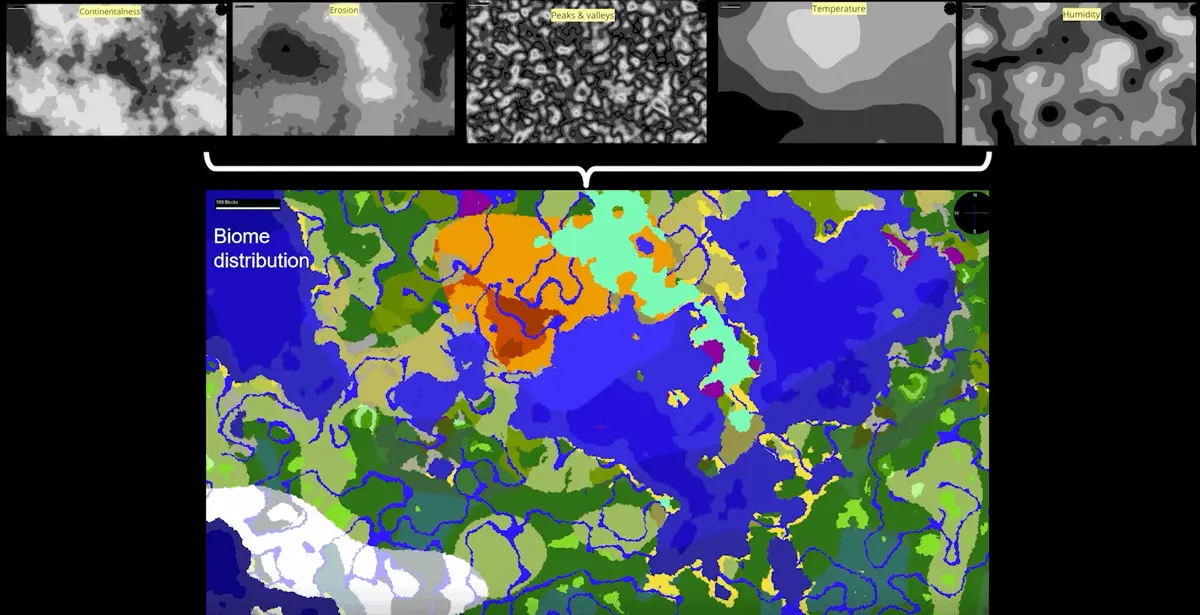

For example, in a game with complex terrain generation like Minecraft, we might want to take into account many properties such as continentalness, erosion, peaks and valleys, temperature, and humidity at each position. We can see from the image above that Minecraft's world generation algorithm achieves even more granularity by using different types of random maps for each property.

In my case, I just needed to select 1 biome value out of 11 at each tile position, so I went with just 2 2D Perlin maps: 1 for rainfall and 1 for temperature. Then, I came up with the following mapping to combine the 2 values:

The key point is that we now have a way to smoothly transition between terrains, taking into account both rainfall and temperature gradients.

Now, we can see how our algorithm will avoid placing contrasting biomes adjacently and generate more realistic outputs. For example, it's super unlikely that a "Desert" tile gets placed next to a "Wetland" tile because they're far apart in both dimensions of the mapping, which makes sense intuitively: a desert is super dry and hot, while a wetland is super wet and cool.

Implementing and prototyping terrain generation

At this point, I had a good idea of the solution, so I hacked together a Foundry script to output ASCII values (instead of the actual SVG pixel data URIs) to test and prototype it. I wanted to get a quick sense of the implementation, do a quick sanity check, and fiddle around with the mapping to get better generations.

The script is pretty straightforward, except for the last part, where I used a bitmap to determine which tiles to exclude in the final output for the hexagonal shape:

I ran it a couple of times, and it seemed to look good. Now, all that's left was to encode the pixel art data for each tile in an easy way for us to map the value at each position to the tile.

Pixel art

Before marking up each tile's image data in SVG, I thought about the best way to markup the overall SVG.

Immediately, I knew I wanted use the <use> element to render tiles in the world because many of them would be repeated.

Since there's many (160) tiles to render, it'd definitely be worth it in both gas savings (greatly reduces string operations) and final output size.

The "sanity check" here is <use> requires 44 to 48 characters to render a tile (depending on the coordinate), while even a super simple, optimized tile definition requires 100s.

By wrapping the tile definitions with a <g> container with visibility="hidden", we can easily and efficiently render tiles with <use> at any coordinate without worrying about the initial definitions being rendered:

This setup lets us change the rendered tile by changing the href value and change the position of the tile by changing the transform value.

Encoding the tile definitions

There's a lot of room for optimization with encoding each tile's pixel data by layering rectangles of varying dimensions and colors. For example, if there's a 3×4 block of the same color, it'd be better to render a single 3×4 rectangle, as opposed to 12 1×1 squares.

It starts to get really tricky to find the optimal markup once you have multiple colors because you can start to layer them in a particular order to render lower levels with fewer rectangles. A simple example: consider a 3×4 red block with a 1×1 blue square somewhere in the middle. Instead of rendering the blue square first and 4 red rectangles around it, it'd be more efficient to render the red block as a single 3×4 rectangle and then render a 1×1 blue square over it.

See this helper spreadsheet I used while marking up the tile definitions. Note: I eyeballed the whole process until it felt optimized enough instead of finding some optimal algorithm. If you figure out a solution, I'd love to hear!

Mapping terrain generation to pixel art

After I encoded all the tile definitions, I took the prototyping script and adapted it to generate the actual SVG. There's a couple of fun tricks I used in the final implementation, but otherwise, it's as straightforward as it sounds. I thoroughly commented everything as usual, so I recommend giving the source code a read.

Conclusion

Learning about something like terrain generation feels super cool because it's always felt like black magic to me, and I now feel like I have some base-level understanding. It also feels nice because one of my earliest encounters with terrain generation was with Minecraft, and it feels like I've come full circle by its source code teaching me how it works.

The whole "research" and building process described in this post was done in like a super fast, 1-day sprint, but I wanted to try something different and explain more of my thought processes; I've been wanting to write about more personal things, and while this post isn't that, I hope it displays some personality by showing the way I think.